Table of Contents

- – The Main Principles Of Machine Learning Bootca...

- – The 5-Minute Rule for New Course: Genai For So...

- – Some Known Questions About Best Machine Learn...

- – The Buzz on Online Machine Learning Engineeri...

- – Little Known Questions About 6 Steps To Beco...

- – Fascination About Artificial Intelligence So...

- – The Software Developer (Ai/ml) Courses - Car...

Some people think that's cheating. Well, that's my whole job. If someone else did it, I'm going to use what that person did. The lesson is about putting that aside. I'm forcing myself to think through the possible solutions. It's more about absorbing the material and trying to apply those ideas, and much less about finding a library that does the job or looking for someone else who coded it.

Dig a little deeper into the math at the beginning, just so I can build that foundation. Santiago: Finally, lesson number seven. This is a quote. It says, "You have to understand every detail of an algorithm if you want to use it." And then I say, "I think this is bullshit advice." I don't think that you have to understand the nuts and bolts of every algorithm before you use it.

I've been using neural networks for the longest time. I do have a sense of how gradient descent works. I can't explain it to you right now. I would have to go back and review it to actually get a better intuition. That doesn't mean that I can't solve problems using neural networks, right? (29:05) Santiago: Trying to force people to think, "Well, you're not going to be successful unless you can explain every detail of how this works." It goes back to our sorting example. I think that's just bullshit advice.

As an engineer, I've worked with many, many systems and I've used many, many things whose nuts and bolts I don't understand, even though I understand the impact that they have. That's the final lesson on that thread. Alexey: The funny thing is, when I think about all these libraries like Scikit-Learn, the algorithms they use inside to implement, for example, logistic regression or something else, are not the same as the algorithms we study in machine learning classes.

The Main Principles Of Machine Learning Bootcamp: Build An Ml Portfolio

Even if we tried to learn all these fundamentals of machine learning, in the end, the algorithms that these libraries use are different. Santiago: Yeah, absolutely. I think we need a lot more pragmatism in the industry.

By the way, there are two different paths. I usually speak to those who want to work in the industry and have their impact there. There is a path for researchers, and that is completely different. I don't dare to talk about that because I don't know.

But out there, in the industry, pragmatism goes a long way for sure. (32:13) Alexey: We had a comment that said, "Feels more like motivational speech than talking about transitioning." Maybe we should switch. (32:40) Santiago: There you go, yeah. (32:48) Alexey: It is a good motivational speech.

The 5-Minute Rule for New Course: Genai For Software Developers

One of the things I wanted to ask you. First, let's cover a couple of things. Alexey: Let's start with the core tools and frameworks that you need to learn to actually transition.

I know Java. I know how to use Git. Maybe I know Docker.

What are the core tools and frameworks that I need to learn to do this? (33:10) Santiago: Yeah, absolutely. Great question. I think, number one, you should start learning a little bit of Python. Since you already know Java, I don't think it's going to be a huge transition for you.

Not because Python is the same as Java, but in a week, you're gonna get a lot of the differences there. You're gonna be able to make some progress. That's number one. (33:47) Santiago: Then you get certain core tools that are going to be used throughout your entire career.

Some Known Questions About Best Machine Learning Courses & Certificates [2025].

That's a library like Pandas for data manipulation. And Matplotlib and Seaborn and Plotly. Those three, or one of those three, for charting and displaying graphics. You get Scikit-Learn for the collection of machine learning algorithms. Those are tools that you're going to have to be using. I don't recommend just going and learning about them out of the blue.

Take one of those courses that are going to start introducing you to some problems and to some core ideas of machine learning. I don't remember the name, but if you go to Kaggle, they have tutorials there for free.

What's good about it is that the only requirement for you is to know Python. They're going to present a problem and tell you how to use decision trees to solve that specific problem. I think that process is extremely powerful, because you go from no machine learning background to understanding what the problem is and why you cannot solve it with what you know right now, which is straight software engineering practices.
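To make the decision-tree idea a bit more concrete, here is a minimal sketch of a one-level decision tree (often called a "stump") in plain Python. It is not taken from the Kaggle tutorials, which would normally use a library implementation; the toy dataset and the names `fit_stump` and `predict` are assumptions made up for illustration.

```python
from collections import Counter

def majority(labels):
    """Return the most common label in a list."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels):
    """Find the (feature, threshold) split with the fewest misclassifications.

    rows: list of equal-length numeric feature lists.
    Returns (feature_index, threshold, left_label, right_label).
    """
    best = None
    for f in range(len(rows[0])):
        for threshold in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= threshold]
            right = [y for r, y in zip(rows, labels) if r[f] > threshold]
            if not left or not right:
                continue  # a split must put data on both sides
            l_lab, r_lab = majority(left), majority(right)
            errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, l_lab, r_lab)
    if best is None:
        raise ValueError("no valid split found")
    return best[1:]

def predict(stump, row):
    """Classify one row by checking which side of the threshold it falls on."""
    f, threshold, l_lab, r_lab = stump
    return l_lab if row[f] <= threshold else r_lab

# Hypothetical toy data: [hours_studied, hours_slept] -> exam result
rows = [[1, 8], [2, 6], [3, 7], [6, 5], [7, 8], [8, 6]]
labels = ["fail", "fail", "fail", "pass", "pass", "pass"]

stump = fit_stump(rows, labels)
print(stump)                   # (0, 3, 'fail', 'pass')
print(predict(stump, [5, 7]))  # pass
```

The point of the sketch is that the rule "more than 3 hours of study means pass" is never written by hand; it is found by searching the data. A full decision tree repeats the same search recursively on each side of the split.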

The Buzz on Online Machine Learning Engineering & Ai Bootcamp

On the other hand, ML engineers focus on building and deploying machine learning models. They concentrate on training models with data to make predictions or automate tasks. While there is overlap, AI engineers handle more diverse AI applications, while ML engineers have a narrower focus on machine learning algorithms and their practical implementation.

Machine learning engineers focus on developing and deploying machine learning models into production systems. They work on engineering, ensuring models are scalable, efficient, and integrated into applications. Data scientists, on the other hand, have a broader role that includes data collection, cleaning, exploration, and building models. They are often responsible for extracting insights and making data-driven decisions.

As organizations increasingly adopt AI and machine learning technologies, the demand for skilled professionals grows. Machine learning engineers work on cutting-edge projects, contribute to innovation, and earn competitive salaries.

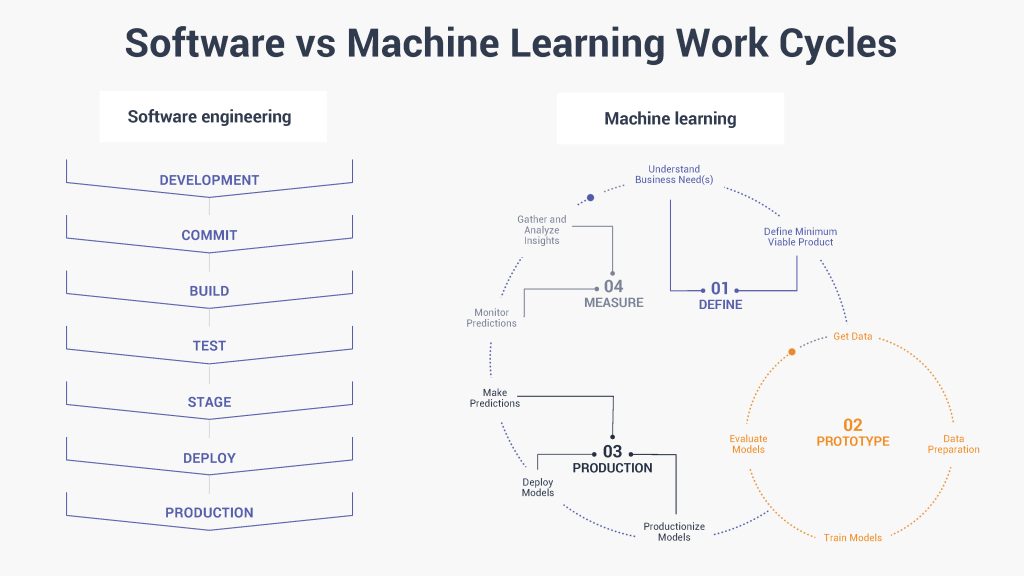

ML is fundamentally different from traditional software development because it focuses on teaching computers to learn from data, rather than programming explicit rules that are executed systematically. Unpredictability of outcomes: You are probably used to writing code with predictable outputs, whether your function runs once or a thousand times. In ML, however, the outcomes are less certain.
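A small sketch can illustrate the contrast. The example below is an assumption-laden toy, not a real training pipeline: `add_tax` stands in for conventional code, and the hypothetical `train_mean_model` stands in for a training run whose result depends on which random samples it happened to see.

```python
import random

def add_tax(price):
    """Conventional code: the same input always gives the same output."""
    return round(price * 1.2, 2)

def train_mean_model(seed, n=50):
    """Toy 'training': estimate a mean from a random sample of noisy data.

    The learned value depends on which samples were drawn, so it varies
    with the seed, much like model weights vary between training runs.
    """
    rng = random.Random(seed)
    samples = [rng.gauss(10.0, 2.0) for _ in range(n)]
    return sum(samples) / n

print(add_tax(100), add_tax(100))  # identical on every call
print(train_mean_model(seed=1))    # one "training run"
print(train_mean_model(seed=2))    # another run gives a different estimate
print(train_mean_model(seed=1))    # fixing the seed restores repeatability
```

Both estimates land near the true value of 10, but neither is exactly 10 and they do not agree with each other. Fixing the random seed is a common way to make such runs repeatable when debugging.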

Pre-training and fine-tuning: How these models are trained on vast datasets and then fine-tuned for specific tasks. Applications of LLMs: Such as text generation, sentiment analysis, and information search and retrieval. Papers like "Attention Is All You Need" by Vaswani et al., which introduced transformers. Online tutorials and courses focusing on NLP and transformers, such as the Hugging Face course on transformers.

Little Known Questions About 6 Steps To Become A Machine Learning Engineer.

The ability to manage codebases, merge changes, and resolve conflicts is just as important in ML development as it is in traditional software projects. The skills developed in debugging and testing software applications are highly transferable. While the context might change from debugging application logic to identifying issues in data processing or model training, the underlying principles of systematic investigation, hypothesis testing, and iterative refinement are the same.

Machine learning, at its core, is heavily dependent on statistics and probability theory. These are crucial for understanding how algorithms learn from data, make predictions, and evaluate their performance. You should become comfortable with concepts like statistical significance, distributions, hypothesis testing, and Bayesian thinking in order to design and interpret models effectively.
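As one small worked example of the Bayesian thinking mentioned above, the sketch below applies Bayes' rule to a hypothetical spam filter. The function name `posterior` and all of the rates are invented for illustration.

```python
def posterior(prior, true_positive_rate, false_positive_rate):
    """P(condition | positive result) via Bayes' rule."""
    # Total probability of seeing a positive result at all.
    evidence = true_positive_rate * prior + false_positive_rate * (1 - prior)
    return true_positive_rate * prior / evidence

# Hypothetical filter: 1% of mail is spam; the filter flags 95% of spam
# and also (wrongly) flags 5% of legitimate mail.
p = posterior(prior=0.01, true_positive_rate=0.95, false_positive_rate=0.05)
print(round(p, 3))  # 0.161
```

Even with a filter that catches 95% of spam, a flagged message is spam only about 16% of the time under these assumed rates, because legitimate mail is so much more common. The same reasoning applies when interpreting the precision of a classifier on imbalanced data.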

For those interested in LLMs, a thorough understanding of deep learning architectures is beneficial. This includes not only the mechanics of neural networks but also the architecture of specific models for different use cases, like CNNs (Convolutional Neural Networks) for image processing and RNNs (Recurrent Neural Networks) and transformers for sequential data and natural language processing.

You should be aware of these issues and learn techniques for identifying, mitigating, and communicating about bias in ML models. This includes the potential impact of automated decisions and their ethical implications. Many models, especially LLMs, require significant computational resources that are often provided by cloud platforms like AWS, Google Cloud, and Azure.

Building these skills will not only facilitate a successful transition into ML but also ensure that developers can contribute effectively and responsibly to the advancement of this dynamic field. Theory is essential, but nothing beats hands-on experience. Start working on projects that allow you to apply what you've learned in a practical context.

Participate in competitions: Join platforms like Kaggle to take part in NLP competitions. Build your own projects: Start with simple applications, such as a chatbot or a text summarization tool, and gradually increase complexity. The field of ML and LLMs is rapidly evolving, with new breakthroughs and technologies emerging regularly. Staying up to date with the latest research and trends is crucial.

Fascination About Artificial Intelligence Software Development

Contribute to open-source projects or write blog posts about your learning journey and projects. As you gain expertise, start looking for opportunities to incorporate ML and LLMs into your work, or seek new roles focused on these technologies.

Potential use cases in interactive software, such as recommendation systems and automated decision-making. Understanding uncertainty, basic statistical measures, and probability distributions. Vectors, matrices, and their role in ML algorithms. Error minimization techniques and gradient descent explained simply. Terms like model, dataset, features, labels, training, inference, and validation. Data collection, preprocessing techniques, model training, evaluation processes, and deployment considerations.
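Since gradient descent appears among the topics above, a minimal sketch may help show what it does. The code fits a straight line by repeatedly stepping against the gradient of the mean squared error; the function name, learning rate, step count, and toy data are all assumptions chosen for illustration, and real libraries typically use more sophisticated optimizers.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by minimizing mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) w.r.t. w and b.
        grad_w = (2 / n) * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = (2 / n) * sum((w * x + b - y) for x, y in zip(xs, ys))
        # Step a small distance downhill along each gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The loop never solves for the line directly. It starts from a guess of zero and keeps nudging `w` and `b` in the direction that reduces the error, which is the same idea used, at much larger scale, to train neural networks.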

Decision Trees and Random Forests: Intuitive and interpretable models. Matching problem types with appropriate models. Feedforward Networks, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs).

Data flow, transformation, and feature engineering strategies. Scalability principles and performance optimization. API-driven approaches and microservices integration. Latency management, scalability, and version control. Continuous Integration/Continuous Deployment (CI/CD) for ML workflows. Model monitoring, versioning, and performance tracking. Detecting and addressing changes in model performance over time. Addressing performance bottlenecks and resource management.

The Software Developer (Ai/ml) Courses - Career Path Statements

You'll be introduced to three of the most relevant components of the AI/ML discipline: supervised learning, neural networks, and deep learning. You'll grasp the differences between traditional programming and machine learning through hands-on development in supervised learning before building out complex distributed applications with neural networks.

This course serves as an introduction to machine lear ...
